The auto-generated hybrid codes hide the overhead of the various data movements by overlapping them with computation.

#Opencl benchmark ucsd full

Sourouri, Mohammed; Baden, Scott & Cai, Xing. Panda: A Compiler Framework for Concurrent CPU+GPU Execution of 3D Stencil Computations on GPU-accelerated Supercomputers.

We present a new compiler framework for truly heterogeneous 3D stencil computation on GPU clusters. Our framework consists of a simple directive-based programming model and a tightly integrated source-to-source compiler. Annotated with a small number of directives, sequential stencil C codes can be automatically parallelized for large-scale GPU clusters. The most distinctive feature of the compiler is its capability to generate hybrid MPI + CUDA + OpenMP code that uses concurrent CPU + GPU computing to unleash the full potential of powerful GPU clusters.

The uncovered good programming practices can be used by computational scientists who want to adopt similar heterogeneous hardware platforms for a wide variety of applications.
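To make "sequential stencil C code" concrete, here is a minimal sketch of the kind of loop nest such a compiler would take as input. It is only an illustration: the function names, the 7-point shape and the coefficients `c0` and `c1` are assumptions, and the framework's directive syntax is not shown because the abstract does not give it.

```c
#include <stddef.h>

/* Flatten (i, j, k) into a 1D index for an nx * ny * nz grid. */
static size_t idx(size_t i, size_t j, size_t k, size_t ny, size_t nz)
{
    return (i * ny + j) * nz + k;
}

/* One sweep of a 7-point stencil over the interior points.
 * Boundary points of `out` are left untouched.
 * c0 and c1 are illustrative coefficients, not taken from the paper. */
void stencil7_sweep(const double *in, double *out,
                    size_t nx, size_t ny, size_t nz,
                    double c0, double c1)
{
    for (size_t i = 1; i + 1 < nx; ++i)
        for (size_t j = 1; j + 1 < ny; ++j)
            for (size_t k = 1; k + 1 < nz; ++k)
                out[idx(i, j, k, ny, nz)] =
                    c0 * in[idx(i, j, k, ny, nz)] +
                    c1 * (in[idx(i - 1, j, k, ny, nz)] +
                          in[idx(i + 1, j, k, ny, nz)] +
                          in[idx(i, j - 1, k, ny, nz)] +
                          in[idx(i, j + 1, k, ny, nz)] +
                          in[idx(i, j, k - 1, ny, nz)] +
                          in[idx(i, j, k + 1, ny, nz)]);
}
```

Every interior point depends only on its six neighbours from the previous sweep, which is what makes this pattern a natural candidate for splitting across CPU and GPU.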

#Opencl benchmark ucsd simulator

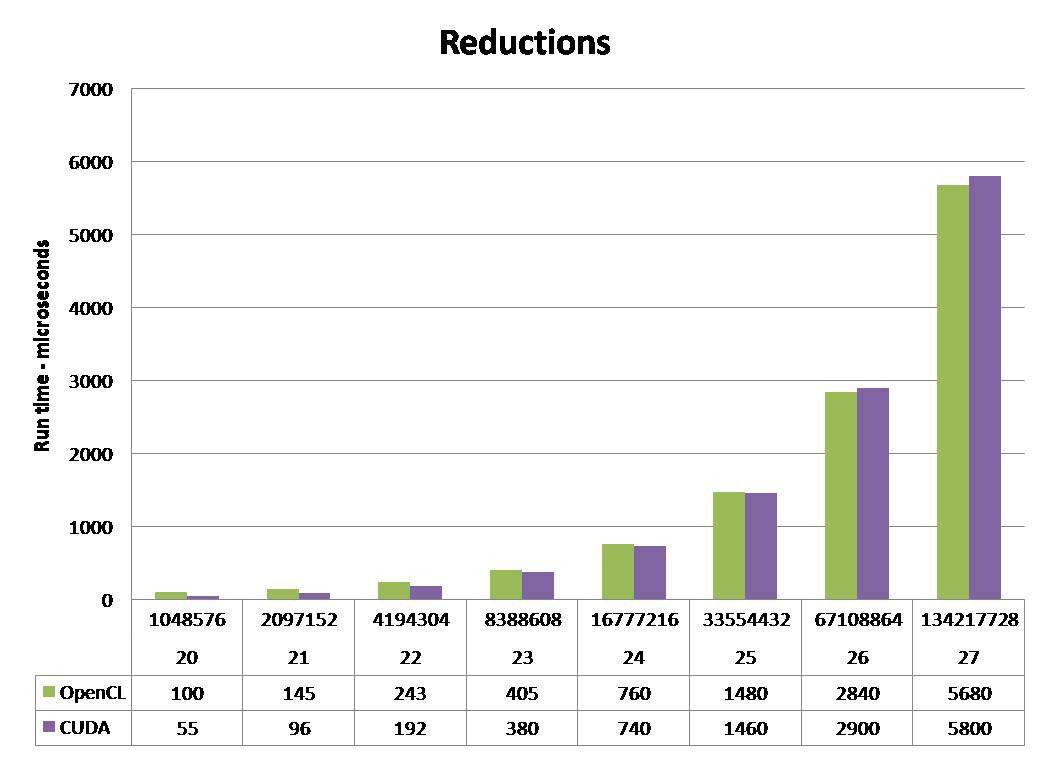

Langguth, Johannes; Lan, Qiang; Gaur, Namit & Cai, Xing. Accelerating Detailed Tissue-Scale 3D Cardiac Simulations Using Heterogeneous CPU-Xeon Phi Computing. International Journal of Parallel Programming.

We investigate heterogeneous computing, which involves both multicore CPUs and manycore Xeon Phi coprocessors, as a new strategy for computational cardiology. In particular, 3D tissues of the human cardiac ventricle are studied with a physiologically realistic model that has 10,000 calcium release units per cell and 100 ryanodine receptors per release unit, together with tissue-scale simulations of the electrical activity and calcium handling. In order to attain resource-efficient use of heterogeneous computing systems that consist of both CPUs and Xeon Phis, we first direct the coding effort at ensuring good performance on the two types of compute devices individually. Although SIMD code vectorization is the main theme of performance programming, the actual implementation details differ considerably between CPU and Xeon Phi. Moreover, in addition to combined OpenMP+MPI programming, a suitable division of the cells between the CPUs and Xeon Phis is important for resource-efficient usage of an entire heterogeneous system. Numerical experiments show that good resource utilization is indeed achieved and that such a heterogeneous simulator paves the way for ultimately understanding the mechanisms of arrhythmia.

So far I’ve run it on AMD, Intel and NVIDIA platforms and several ATI/NVIDIA GPUs + Intel Sandy Bridge/Ivy Bridge CPUs. The problem size that can be adjusted is the number of workgroups (I named it ‘Blocks’ as in CUDA). If you are planning to use a weaker GPU you might want to decrease it. Larger problems give more precise measurements on average. Feel free to use and share it if you find it useful.
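Since the adjustable problem size is the number of workgroups (‘Blocks’), the reported figure presumably follows from a simple operation count divided by the measured time. The sketch below shows that arithmetic. The function and parameter names are hypothetical, and counting one FMAD as two floating-point operations is the usual convention rather than something the post states.

```c
/* Achieved GFLOP/s for a run in which every work-item executes
 * `fmads_per_item` multiply-adds. Each FMAD is counted as 2 FLOPs
 * (one multiply, one add). All names here are illustrative. */
double fmad_gflops(double work_groups, double work_items_per_group,
                   double fmads_per_item, double seconds)
{
    double flops = 2.0 * work_groups * work_items_per_group * fmads_per_item;
    return flops / seconds / 1e9;
}
```

For example, 1000 workgroups of 500 work-items, each doing 1000 FMADs in one second, come out at 1 GFLOP/s. Raising the workgroup count lengthens the run, so fixed launch overhead takes up a smaller share of the measured time.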

#Opencl benchmark ucsd driver

Is there any way of getting information about that in advance, before actually running a kernel? The GPU driver sometimes crashes because of that, and it would be good to avoid it. And test it.

The second one is related to OpenCL: sometimes when I run the program on weaker GPUs (i.e. mobile ones), I get an error indicating that it is out of resources.
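One possible way to catch some of these failures before a launch is to compare the requested work-group size and local-memory use against the limits the OpenCL runtime reports. The sketch below shows only the comparison logic. The struct and function names are hypothetical, and in a real host program the fields would be filled from `clGetKernelWorkGroupInfo` and `clGetDeviceInfo`. Passing these checks does not guarantee success, since a driver may still return `CL_OUT_OF_RESOURCES` for other reasons.

```c
#include <stdbool.h>
#include <stddef.h>

/* Limits gathered before the launch (hypothetical helper struct).
 * In a real host program they would come from the OpenCL runtime:
 *   clGetKernelWorkGroupInfo(..., CL_KERNEL_WORK_GROUP_SIZE, ...)
 *   clGetKernelWorkGroupInfo(..., CL_KERNEL_LOCAL_MEM_SIZE, ...)
 *   clGetDeviceInfo(..., CL_DEVICE_LOCAL_MEM_SIZE, ...)            */
typedef struct {
    size_t kernel_max_work_group_size;
    unsigned long long kernel_local_mem_bytes;
    unsigned long long device_local_mem_bytes;
} launch_limits;

/* True if the requested work-group size and the kernel's local-memory
 * use stay within the reported limits. */
bool launch_fits(const launch_limits *lim, size_t work_group_size)
{
    if (work_group_size == 0)
        return false;
    if (work_group_size > lim->kernel_max_work_group_size)
        return false;
    if (lim->kernel_local_mem_bytes > lim->device_local_mem_bytes)
        return false;
    return true;
}

/* Shrink a requested work-group size to the kernel's reported maximum. */
size_t clamp_work_group_size(const launch_limits *lim, size_t requested)
{
    return requested > lim->kernel_max_work_group_size
               ? lim->kernel_max_work_group_size
               : requested;
}
```

On a weaker GPU the benchmark could then clamp its work-group size, or reduce the number of workgroups, instead of launching and waiting for the error.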

#Opencl benchmark ucsd drivers

I wrote a simple application for my purposes to measure the peak FLOP/s of a device in OpenCL. Basically I just ported my older code from CUDA in order to compare the results, as I am considering OpenCL as an alternative. I wanted to check how the datatypes affect the performance. It is based on a simple FMAD code to maximize the throughput. I programmed it under Windows as I couldn’t make NVIDIA and ATI GPUs work together in Linux. I’ve noticed that the drivers do something with the kernel, hence the problem. So here’s my first question: has anyone succeeded in getting OpenCL-capable GeForce/Tesla and Radeon cards to work together in Linux?
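The FMAD-based measurement can be pictured as a long chain of multiply-adds executed by every work-item. The sketch below writes that inner loop in plain C for illustration. It is not the author's kernel: the `real_t` typedef and the function name are assumptions, and changing the typedef (for example to `double`) is one way to compare datatypes.

```c
/* Element type under test; switch to double to compare datatypes. */
typedef float real_t;

/* Dependent chain of multiply-adds, as one work-item might execute it.
 * Each iteration is one multiply-add, counted as 2 FLOPs. Returning the
 * result keeps the compiler from discarding the loop. */
real_t fmad_chain(real_t a, real_t b, real_t c, unsigned long iterations)
{
    for (unsigned long i = 0; i < iterations; ++i)
        a = a * b + c;
    return a;
}
```

A real kernel would typically keep several independent chains per work-item so that the multiply-add latency is hidden. A single chain is shown here only to keep the operation count obvious.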
